Joanna Alphonso:

Today’s technological advances, intended to benefit humanity, have a significant capability to harm every one of us, especially as long as they remain legally unbridled. Welcome to the world of artificial intelligence (AI).

What is AI?

AI makes it possible for computers to learn from experience, drawing on the inputs of their human users. It has exploded over the last year due to its ability to generate text, research, and images. For example, images of Pope Francis surfaced in early 2023 nicknamed “Balenciaga Pope,” “Puffer Pontiff,” and “Vicar of Drip,” which many assumed to be real without a second thought. With the right AI software, they were easily created from a prompt like “show me the pope in a puffer jacket.”

“Balenciaga Pope” AI-generated image that surfaced in early 2023. Via SBS News.

According to AI software developer SAS, the term AI was coined in 1956, when initial explorations of problem solving and symbolic methods were taking place. The U.S. Department of Defense took this concept and “began training computers to mimic basic human reasoning” in the 1960s. This road led to street mapping projects in the ’70s, further learning projects in the ’80s, and eventually to virtual assistants in 2003. These virtual assistants would then become known as Siri, Cortana, Alexa, and others that are now built into various social media platforms like Facebook, X (formerly Twitter), WhatsApp, Instagram, and more. Today, we have generative AI at our fingertips in the virtual world.

What is AI capable of doing?

Many students use AI software to generate essays for classes instead of writing them themselves. Some scientific press releases have been generated by AI from published articles on human-conducted research. iPhone users have probably used Siri to verbally command their phones to look for a recipe or song lyrics, or even to get directions and dial a friend hands-free while driving.

SAS says that AI adapts itself through advanced progressive learning algorithms so that it can acquire skills, just like a human. “It can teach itself to play chess, or teach itself what product to recommend next online.” It observes every stroke of input that a human makes into its software to better understand, learn, predict, and generate higher-quality outputs. It also gathers data online, regardless of whether it’s free to use or protected by copyright, to generate a written text or image, like the aforementioned “Puffer Pontiff.” Although that image is now known to be fake, there was a time when people assumed it to be real. This leads to the dark side of AI, such as AI-generated pornography.

AI… Pornography?

Imagine that one day, your co-worker taps you on the shoulder and beckons you to come to another co-worker’s computer. When you get there, a crowd of your co-workers is gathered around the computer. They’re watching a video of you performing sexual acts … except, it’s not you. Well, it’s your face, but the body isn’t yours, and you certainly haven’t “performed” pornography, let alone consented to it being filmed and posted online.

That’s what happened to 28-year-old Kate back in 2019, as reported by HuffPost. The video looked real and even identified Kate by name. It was a doctored video, called a “deepfake,” which took Kate’s face and superimposed it onto the face of a pornography actress in what was likely an existing video.

Similar incidents have happened to many others, the vast majority of them women (names changed for privacy). “Once something is uploaded it can never really get deleted. It will just be reposted forever,” said Tina, a 24-year-old Canadian victim of nonconsensual deepfake pornography, when she was interviewed by HuffPost. “It felt gross to see my face where it shouldn’t be.” HuffPost also reported that 29-year-old Maya had been receiving a lot of messages from strangers requesting sex. She found out not long after that her face had been used in deepfake pornography.

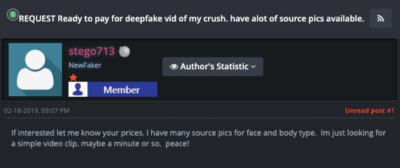

HuffPost reported that easy-to-use deepfake generator apps are used in conjunction with photo search engines: people upload any image to find a pornography actress with similar features, then swap the faces and watch. Paid forums, like the one above (unnamed by HuffPost), exist as well for “more professional-looking videos of specific women, and share links to the women’s social media profiles for source imagery.” Easy targets on platforms like these are female Twitch streamers, YouTubers, and Instagram influencers, as well as the co-workers, friends, and exes of the requesters.

Anonymous paid request made online for deepfake pornography of the requester’s ‘crush.’ Via HuffPost.

One creator whom HuffPost was able to contact claimed to be “a 25-year-old Greek man, and ‘one of the first guys’ to make deepfake pornography.” His videos had gained over 300,000 views at the time that the article was written. Prices can range from $15 to $40 per video, usually paid in cryptocurrencies like bitcoin (instead of PayPal) to protect the privacy of the requesters. Ironically, the privacy of the requesters and makers is preserved in order to violate the privacy and dignity of their victims.

“Women can tell men, ‘I don’t want to date you, I don’t want to know you, I don’t want to take my clothes off for you,’” said Mary Anne Franks, president of the Cyber Civil Rights Initiative (CCRI), “but now men can say, ‘Oh yeah? I’m going to force you to, and if I can’t do it physically, I will do it virtually. There’s nothing you can really do to protect yourself except not exist online.’”

AI has also been used to generate child pornography, according to Fight the New Drug (FTND). FTND is an organization “that exists to provide individuals the opportunity to make an informed decision regarding pornography by raising awareness on its harmful effects using only science, facts, and personal accounts.” Their goal is to “decrease the demand for sexual exploitation through education while helping individuals live empowered lives free from the harmful effects of pornography.”

FTND reported that AI accelerated the rise of deepfakes on mainstream pornography sites and that these sites refuse to take these non-consensual videos seriously. Technology magazine Wired reported a major flood of such videos, racking up millions of views.

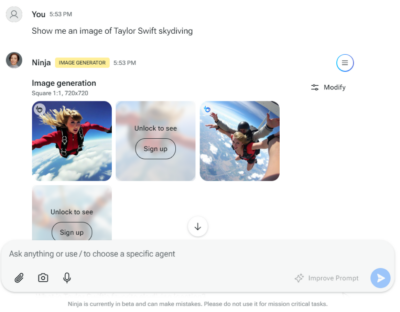

Screenshot of how easy it is to generate an AI image. Generated by myninja.ai.

AI has been used to make pornography most commonly of female celebrities like Natalie Portman, Billie Eilish, Taylor Swift, and Jenna Ortega. AI-generated images of Taylor Swift flooded X, gaining over “45 million views, 24,000 reposts, and hundreds of thousands of likes,” according to The Verge. Such images are as easy to generate with AI as the “Puffer Pontiff.”

Deadline reported in August 2024 that Ortega deleted her X account after receiving AI-generated sexual images of herself as a child. According to the X terms of service, adult content is allowed as long as it is “consensually produced, provided it’s properly labeled, and not prominently displayed” – which the AI-generated images are not.

What recourse do victims have?

Carrie Goldberg, an attorney specializing in sexual privacy, told HuffPost, “Deepfake websites exist to monetize people’s humiliation. As disappointing and sobering as it is, there aren’t a lot of options for victims.”

This reality rings true in Canada because criminal laws relating to the creation and distribution of deepfake or AI pornography simply do not exist. “The current state of the law is therefore that deepfake pornography is unlawful in some circumstances, but not in others,” says Canadian criminal lawyer Aitken Robertson. “While it is not currently a criminal act to possess deepfake pornography, the publication of such material, particularly when it depicts activity deemed to be obscene or when the material is used for an unlawful purpose, may result in criminal charges.”

The legal definition of child pornography doesn’t limit itself to real images and can be applied to any depictions of any persons under the age of 18, including AI-generated images. Robertson said that those who “possess, access, distribute, or create such material may be subject to the same criminal liability as if the depictions were real.”

Although AI pornography is relatively new, Steven Larouche of Quebec pleaded guilty in April 2023 to creating at least seven AI-generated child pornography videos. According to the National Post, he admitted that he used deepfake technology to superimpose the face of one individual onto the body of another person.

The Ontario Bar Association noted in June 2023 that in civil cases, the provinces of Alberta, British Columbia, Manitoba, Nova Scotia, Newfoundland & Labrador, and Saskatchewan have made laws allowing for a right of civil action for those who have had their private images distributed without consent. Ontarians are still left to common law remedies, like the torts of public disclosure of private facts, defamation, and intentional infliction of emotional distress.

British Columbia enacted the Intimate Images Protection Act in January 2024, which directly addresses the use of technologies to alter and generate images to produce deepfakes. This law is the first of its kind in Canada.

“But it’s victimless!”

FTND explored the idea that AI pornography is the ethical alternative to real pornography, a vast majority of which comes from the recording and publishing of the rapes of sex trafficking victims. A company, renamed by FTND as “Nonexistent Nudes” for the article, advertises AI-generated images of nude women for one dollar each. The idea is that this porn is ethical because its production has no victims: the women in the images look real, but none of them exist in real life.

FTND slammed this idea, writing that “more nude images don’t remove the motivations of perpetrators of image-based sexual abuse who upload and share nonconsensual sexual images for a whole host of reasons: control, attention-seeking, entitlement, humiliation, or to build up social standing.” It continues, “they also don’t solve the problem of objectification. Treating a person as if they are an object or a ‘means to an end’ can lead to feelings of inadequacy, anxiety, and depression.” FTND takes the position that AI-generated pornography is as unethical as real pornography.

The very fact that AI generates results by pulling images from various online sources, without regard for privacy or copyright, means that there are victims by the very nature of AI software. It combines faces and body parts of real people to make a Frankenstein for sexual entertainment. Those people whose images have been taken for this purpose are being violated intimately, whether they are aware of it or not.

What can the law do about it?

Although the current laws allow for some recourse for victims, the fast-paced development of such technologies and software will vastly outpace laws due to the slow nature of our legislative process. It may, however, be reasonable to think that governments can act on this as quickly as they passed and enacted emergency legislation during the COVID-19 pandemic. The Canadian Parliament passed and enacted the COVID-19 Emergency Response Act in just two days. The misuse of AI for abuse, particularly of women, is arguably on par with an emergency situation because it exponentially perpetuates a culture of gender-based violence. Concerned citizens should contact their MPs about AI-generated porn to push forward legislation criminalizing it, with a penalty that reflects the shattered dignity of its countless victims.

FTND published some steps for victims of deepfakes to take. They suggest contacting online organizations like the CCRI, hiring a lawyer and going to law enforcement, keeping evidence of the abuse, finding help for the psychological effects of the abuse, reminding victims that it isn’t their fault, focusing on what victims do have control over, and taking precautions in the future. They warn readers that “safe” messaging apps could easily get hacked, or worse, that the terms of service may violate the privacy of messages and posts.

The broad use of AI means that even a simple selfie can be used for such purposes. Precautions like limiting or ceasing online posts of yourself and loved ones and deleting existing posts may help avoid this, although it is difficult to truly remove all traces of anything posted on the internet.

Every Pixel Journal reported that this year, over 34 million images were produced by AI per day and that over 15 billion images were created using text-to-image algorithms from 2022 to 2023. Vice reported in 2022 that “AI is probably using your images and it’s not easy to opt out.”

Every person is made in the image and likeness of God. Pornography itself is an abomination of this and a violation of human dignity. The use, especially non-consensual, of people’s images to generate deepfake and AI pornography is deplorable. Everyone should be aware of this reality, especially because of stories like those of Kate, Tina, and Maya. “Real connection starts with seeing others as whole people with unique thoughts, feelings, dreams, struggles, and lives,” wrote FTND. “Viewing people as products is harmful to individuals, relationships, and, ultimately, society as a whole.”